RISC versus CISC Wars in the PrePC and PC Eras - Part 1


This two-part blog gives a historical perspective on the ARM vs. 80x86 instruction set competition for three eras: PrePC (late 1970s/early 1980s), PC (mid 1980s to mid 2000s), and PostPC (late 2000s onward).

Round 1: The Beginning of Reduced vs. Complex Instruction Set Computers

The first round of the RISC-CISC Wars started 30 years ago with the publication of "The Case for the Reduced Instruction Set Computer" [1] and the companion piece "Comments on 'The Case for the Reduced Instruction Set Computer'" [2]. We argued then that an instruction set made up of simple, or reduced, instructions using easy-to-decode instruction formats and lots of registers was a better match to integrated circuits and compiler technology than the instruction sets of the 1970s, which featured complex instructions and formats. Our counterexamples were the Digital VAX-11/780, the Intel iAPX-432, and the Intel 8086 architectures, which we labeled Complex Instruction Set Computers (CISC).

I recently found an old set of hand-drawn slides from 1981, one of which shows the simple instructions and formats of the Berkeley RISC architecture.

Benchmarking Pipelined Microprocessors versus Microcoded Microprocessors

To implement their more sophisticated operations, CISCs relied on microcode, which is an internal interpreter with its own program memory. RISC advocates essentially argued that the simple microinstructions this interpreter executed should instead be exposed to the compiler rather than buried inside the chip.
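To make "microcode as an internal interpreter" concrete, here is a toy sketch, not a model of any real chip. It assumes a hypothetical machine whose single complex instruction, `MOVC` (a memory-to-memory block copy loosely in the spirit of the VAX's string-move instructions), is stored in a control store as a short loop of simple micro-operations. The opcode, the micro-op names (`BZ`, `LOAD`, `STORE`, `ADDI`, `JMP`), and the register names are all invented for illustration.

```python
# Toy microcoded machine: a control store maps each complex opcode to a
# microprogram, and a sequencer interprets its micro-ops one at a time.
# All opcode, micro-op, and register names are hypothetical.

CONTROL_STORE = {
    # MOVC: copy regs["len"] bytes from address regs["src"] to regs["dst"].
    "MOVC": [
        ("BZ", "len", 7),       # 0: if len == 0, jump past the end (done)
        ("LOAD", "t", "src"),   # 1: t <- mem[src]
        ("STORE", "dst", "t"),  # 2: mem[dst] <- t
        ("ADDI", "src", 1),     # 3: advance the source pointer
        ("ADDI", "dst", 1),     # 4: advance the destination pointer
        ("ADDI", "len", -1),    # 5: one fewer byte to copy
        ("JMP", 0),             # 6: loop back to the test
    ],
}


def run_microcode(opcode, regs, mem):
    """Execute one complex instruction by stepping through its microprogram.

    Returns the number of micro-ops executed, a rough stand-in for cycles.
    """
    program = CONTROL_STORE[opcode]
    upc = 0    # micro-program counter
    steps = 0
    while upc < len(program):
        op, *args = program[upc]
        steps += 1
        upc += 1
        if op == "BZ":
            reg, target = args
            if regs[reg] == 0:
                upc = target
        elif op == "JMP":
            (upc,) = args
        elif op == "LOAD":
            dst, addr_reg = args
            regs[dst] = mem[regs[addr_reg]]
        elif op == "STORE":
            addr_reg, src = args
            mem[regs[addr_reg]] = regs[src]
        elif op == "ADDI":
            reg, imm = args
            regs[reg] += imm
        else:
            raise ValueError(f"unknown micro-op {op}")
    return steps


mem = bytearray(b"RISC....")
regs = {"src": 0, "dst": 4, "len": 4, "t": 0}
print(run_microcode("MOVC", regs, mem))  # 29
print(mem)                               # bytearray(b'RISCRISC')
```

Here one instruction turns into 29 micro-op steps for a 4-byte copy. The RISC argument was that a compiler could emit equivalent simple instructions directly and let the hardware pipeline them, instead of hiding them behind an on-chip interpreter.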

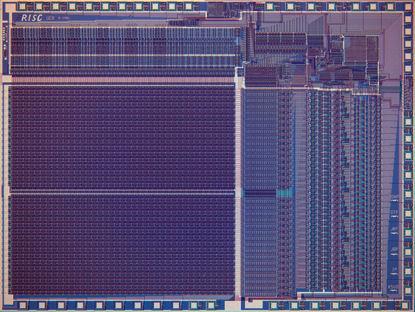

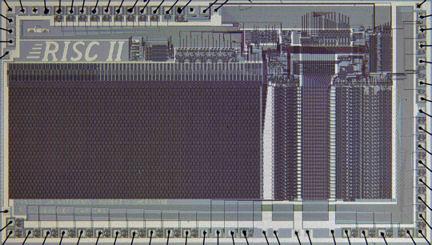

RISC architects took advantage of the simpler instruction sets to first demonstrate pipelining and later superscalar execution in microprocessors, both of which had been limited to the supercomputer realm. Below are die photos of Berkeley's RISC I and RISC II, which were single-issue, five-stage pipelined, 32-bit microprocessors.

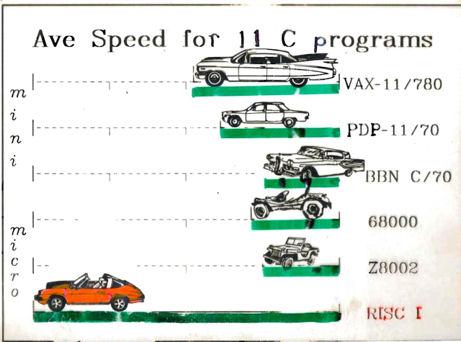

The chart below was scanned from a 1981 slide I used to summarize the Berkeley RISC results, using different cars to indicate complexity. First car wins! (The top three cars were minicomputers. Because the 8086 lacked a flat address space, at the time it was hard to find a C compiler and UNIX system with which to benchmark it.)

It's still amazing to me that there was a time when graduate students could build a prototype chip that was actually faster than what industry could build.

ARM, MIPS, and SPARC successfully demonstrated the benefits of RISC in the marketplace of the 1980s, with performance that kept pace with the rapid growth in transistor counts from Moore's Law.

Round 2: Intel Responds and Dominates in the PC Era

Although the VAX and 432 succumbed to the RISC pressure, Intel x86 designers rose to the challenge. Binary compatibility demanded that they keep the complex instructions, so they couldn't abandon microcode. However, as RISC advocates pointed out, the most frequently executed instructions were simple instructions. Intel's solution was to have hardware translate the simple x86 instructions into internal RISC instructions and then apply to these simple internal instructions whatever ideas RISC architects had demonstrated: pipelining, superscalar execution, and so on. Intel paid a "CISC tax" in longer pipelines, extra translation hardware, and the microcode burden of the complex operations, but several factors kept that tax affordable:

- Intel's semiconductor fabrication technology was ahead of what RISC companies could use, so its smaller geometries could hide some of the CISC tax;

- As Moore's Law led to on-chip integration of floating-point units and caches, the CISC tax shrank over time to a smaller fraction of the chip;

- The growing popularity of the IBM PC, combined with the distribution of software in binary form, made the x86 instruction set ever more valuable, whatever the tax.
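The translation step described above can be sketched as a toy "cracker" that turns simple x86-style two-operand instructions into RISC-like load/store micro-ops. This is only an illustration under stated assumptions: the tuple syntax, the micro-op names, and the temporary register `tmp0` are hypothetical, memory operands are limited to a single bracketed base register, and real decoders must also handle variable-length encodings, condition flags, richer addressing modes, and a microcode path for complex instructions, all of which are ignored here.

```python
# Toy translator from x86-style instructions to RISC-like micro-ops.
# Tuple syntax, micro-op names, and "tmp0" are hypothetical.


def is_mem(operand):
    """Memory operands are written in brackets, e.g. "[rbx]"."""
    return operand.startswith("[") and operand.endswith("]")


def crack(op, dst, src):
    """Return the micro-ops for `op dst, src` (x86 operand order: dst first)."""
    if is_mem(dst) and is_mem(src):
        raise ValueError("these x86 forms allow at most one memory operand")
    if op == "mov":
        if is_mem(src):
            return [("load", dst, src[1:-1])]     # dst <- mem[base]
        if is_mem(dst):
            return [("store", src, dst[1:-1])]    # mem[base] <- src
        return [("mov", dst, src)]
    # Two-operand ALU ops (add, sub, and, ...): dst <- dst op src.
    if is_mem(dst):
        addr = dst[1:-1]
        return [
            ("load", "tmp0", addr),               # read the memory operand
            (op, "tmp0", "tmp0", src),            # compute in a register
            ("store", "tmp0", addr),              # write the result back
        ]
    if is_mem(src):
        return [
            ("load", "tmp0", src[1:-1]),
            (op, dst, dst, "tmp0"),
        ]
    return [(op, dst, dst, src)]                  # register-register: one micro-op


for insn in [("add", "rax", "rbx"), ("add", "rax", "[rsi]"), ("add", "[rdi]", "rax")]:
    print(insn, "->", crack(*insn))
```

In this sketch a register-register `add` passes through as a single micro-op, while an `add` to memory becomes three. The frequent, simple cases map almost one-to-one onto internal RISC-style operations, which is the property the translation approach relies on.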

Wikipedia's conclusion of Round 2 of the RISC-CISC war is "While early RISC designs were significantly different than contemporary CISC designs, by 2000 the highest performing CPUs in the RISC line were almost indistinguishable from the highest performing CPUs in the CISC line."

Given the value of x86 software and eventual performance parity, CISC monopolized the desktop in the PC era.

Conclusion

While RISC became commercially successful in Round 1, to its credit Intel responded by leveraging Moore's Law to maintain binary compatibility with PC software and to embrace RISC concepts by translating x86 instructions into RISC instructions internally. The CISC tax was a small price to pay for the PC market.

In my next post, we'll examine the RISC-CISC Wars in the current PostPC era, which is based on cloud computing and personal mobile devices such as smartphones and tablets.

_________________________________________________________

[1] D. A. Patterson and D. R. Ditzel, "The Case for the Reduced Instruction Set Computer," ACM SIGARCH Computer Architecture News, vol. 8, no. 6, pp. 25-33, Oct. 1980.

[2] D. W. Clark and W. D. Strecker, "Comments on 'The Case for the Reduced Instruction Set Computer'," ACM SIGARCH Computer Architecture News, vol. 8, no. 6, pp. 34-38, Oct. 1980.

Re-use is permitted only for informational and non-commercial or personal purposes.