Arm KleidiAI: Helping AI frameworks elevate their performance on Arm CPUs

Learn all about Arm KleidiAI, an open-source library of optimized routines for Arm CPUs, designed for easy integration into any AI framework.

Arm KleidiAI

At Computex 2024, we proudly unveiled KleidiAI, our groundbreaking software library designed to elevate artificial intelligence (AI) performance on Arm CPUs. The name KleidiAI comes from the Greek word "kleidi," meaning key, signifying its crucial role in advancing AI capabilities on Arm CPUs. In developing this project, we are closely considering the needs of our framework developers to provide an open-source library that is compact, impactful, and easy to adopt into any AI framework.

Although KleidiAI is still in its early stages, it has already helped the Google MediaPipe and XNNPACK teams boost the performance of the Gemma open large language model (LLM) by 25%.

As our demo video shows, our virtual assistant powered by the Gemma model generates responses instantly by running the LLM locally on Arm Cortex-A715 CPUs.

Our ambitious goal, though, is to make KleidiAI more than just a collection of AI-optimized routines. We want this project to become a knowledge repository for learning best practices in software optimization on Arm CPUs. We therefore invite developers to join us on this exciting learning journey from the very beginning and to contribute feedback so that we can enhance the library together.

In this blog post, we will explore KleidiAI's initial features and provide a link to a step-by-step guide to running one of its critical functions for accelerating the Gemma LLM: the integer 4-bit matrix-multiplication routine.

In the following subsection, we will examine what you will find in the library: the micro-kernels.

Micro-kernels

KleidiAI is an open-source library for AI framework developers that provides optimized performance-critical routines for Arm® CPUs.

These routines are called micro-kernels, or ukernels. A micro-kernel is defined as the near-minimum amount of software required to accelerate a given operator with high performance.

Take, for instance, the 2D convolution operator executed through the Winograd algorithm. This computation involves four primary operations:

- Winograd input transform

- Winograd filter transform

- Matrix multiplication

- Winograd output transform

Each of the preceding operations is a micro-kernel.
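To make the four steps concrete, here is a minimal sketch in plain C of the one-dimensional Winograd F(2,3) case, which produces two outputs of a 3-tap filter from four input samples. All function names are hypothetical and are not part of KleidiAI. In this single-channel 1D sketch the "matrix multiplication" step reduces to an element-wise product; in a real 2D convolution with many channels it becomes a batch of matrix multiplications.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical names for illustration only; this is not KleidiAI code. */

/* Step 1: Winograd input transform (4 samples -> 4 transformed values). */
void winograd_input_transform(const float d[4], float v[4]) {
    v[0] = d[0] - d[2];
    v[1] = d[1] + d[2];
    v[2] = d[2] - d[1];
    v[3] = d[1] - d[3];
}

/* Step 2: Winograd filter transform (3 taps -> 4 transformed values). */
void winograd_filter_transform(const float g[3], float u[4]) {
    u[0] = g[0];
    u[1] = 0.5f * (g[0] + g[1] + g[2]);
    u[2] = 0.5f * (g[0] - g[1] + g[2]);
    u[3] = g[2];
}

/* Step 3: multiplication in the transformed domain (element-wise here). */
void winograd_multiply(const float u[4], const float v[4], float m[4]) {
    for (size_t i = 0; i < 4; ++i) {
        m[i] = u[i] * v[i];
    }
}

/* Step 4: Winograd output transform (4 products -> 2 outputs). */
void winograd_output_transform(const float m[4], float y[2]) {
    y[0] = m[0] + m[1] + m[2];
    y[1] = m[1] - m[2] - m[3];
}

/* Cascade of the four steps: y[i] = d[i]*g[0] + d[i+1]*g[1] + d[i+2]*g[2]. */
void conv1d_winograd_f2x3(const float d[4], const float g[3], float y[2]) {
    assert(d != NULL && g != NULL && y != NULL);
    float v[4], u[4], m[4];
    winograd_input_transform(d, v);
    winograd_filter_transform(g, u);
    winograd_multiply(u, v, m);
    winograd_output_transform(m, y);
}
```

Keeping each step as its own routine is what lets a framework run the filter transform once ahead of time and reuse it across many inputs.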

However, why are the preceding operations not called kernels or functions instead?

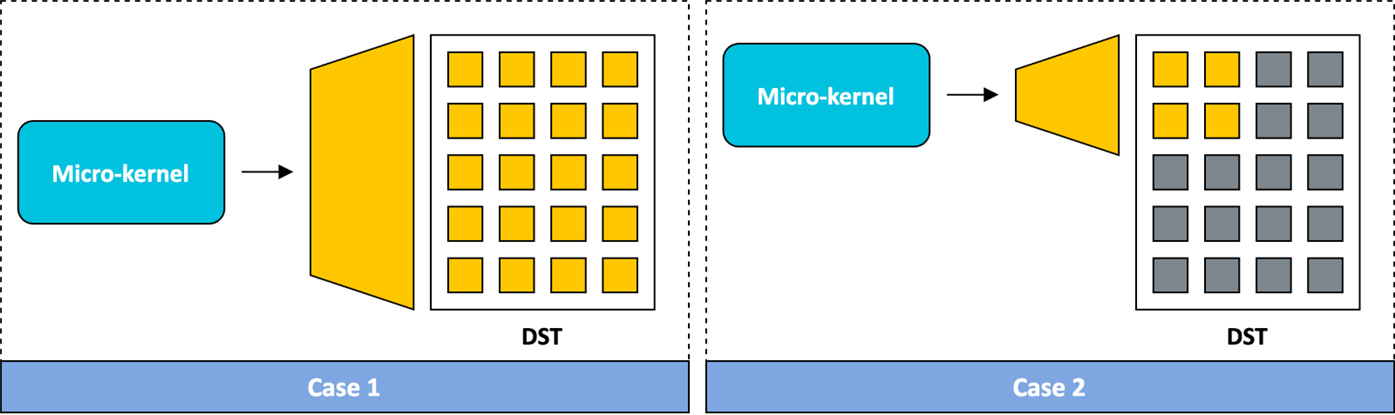

The term micro-kernel highlights their capability to process portions of the output tensor, as shown in the following image:

As illustrated in Case 1 and 2 in the previous image, the micro-kernel is capable of processing only a portion of the entire output.

This design decision allows for fine-grained optimization. For example, it offers the flexibility to cascade multiple micro-kernels efficiently, thereby elevating the performance of AI frameworks to the next level.
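The idea of a routine that computes only a block of the output can be sketched in plain C as follows. This is a simplified illustration, not KleidiAI code: the function names, the argument order, and the row-major float layout are all assumptions made for the example.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical micro-kernel: computes only the m_len x n_len block of
 * dst = lhs (M x K) * rhs (K x N) whose top-left corner is
 * (m_start, n_start). All matrices are row-major. */
void ukernel_matmul_f32(size_t m_start, size_t n_start,
                        size_t m_len, size_t n_len,
                        size_t M, size_t N, size_t K,
                        const float *lhs, const float *rhs, float *dst) {
    assert(m_start + m_len <= M && n_start + n_len <= N);
    for (size_t i = m_start; i < m_start + m_len; ++i) {
        for (size_t j = n_start; j < n_start + n_len; ++j) {
            float acc = 0.0f;
            for (size_t k = 0; k < K; ++k) {
                acc += lhs[i * K + k] * rhs[k * N + j];
            }
            dst[i * N + j] = acc;
        }
    }
}

/* Hypothetical driver: the caller, not the micro-kernel, decides how the
 * output is split into blocks (and could hand blocks to different threads). */
void matmul_f32_blocked(size_t M, size_t N, size_t K,
                        size_t m_step, size_t n_step,
                        const float *lhs, const float *rhs, float *dst) {
    assert(m_step > 0 && n_step > 0);
    for (size_t m = 0; m < M; m += m_step) {
        const size_t m_len = (M - m < m_step) ? (M - m) : m_step;
        for (size_t n = 0; n < N; n += n_step) {
            const size_t n_len = (N - n < n_step) ? (N - n) : n_step;
            ukernel_matmul_f32(m, n, m_len, n_len, M, N, K, lhs, rhs, dst);
        }
    }
}
```

Because the blocking loop lives in the caller, a framework keeps control over threading and over what happens to each block before the next micro-kernel consumes it.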

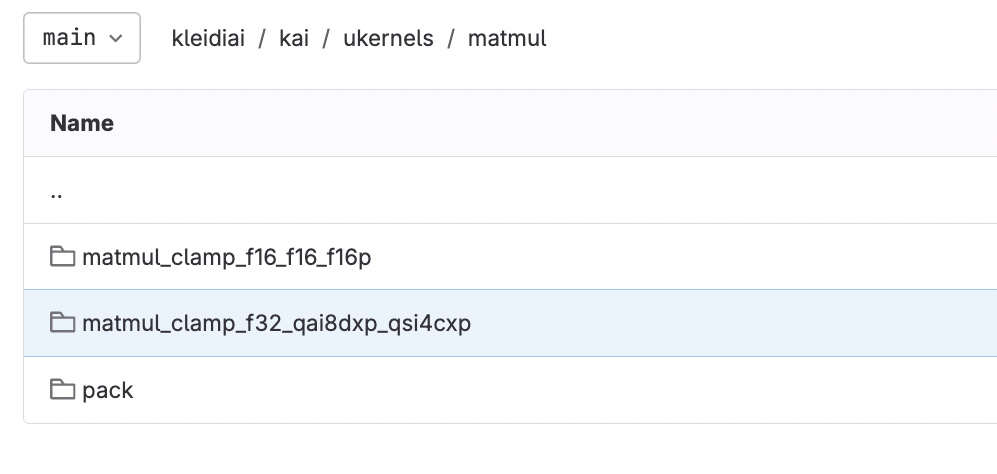

A micro-kernel is available for various Arm® architectures, technologies, and computational parameters. For example, take a look at the Int4 matrix multiplication routine with per-channel quantization held in the matmul_clamp_f32_qai8dxp_qsi4cxp folder:

This folder contains the key LLM micro-kernels for the integer 4-bit matrix multiplication. Inside the folder, you will find micro-kernels optimized with Arm dotprod or i8mm extensions using Neon assembly to maximize efficiency, along with multiple variants that differ regarding the minimum output block processed.
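For readers new to 4-bit integer arithmetic, the following plain C sketch shows the general idea behind one output value of such a matrix multiplication: 4-bit weights packed two per byte, 32-bit integer accumulation, and a single floating-point scale per output channel applied at the end. It is only an illustration under simplifying assumptions. The nibble order, the symmetric int8 activations, and every function name are hypothetical, and the layouts the library actually uses are more involved.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Pack two signed 4-bit values (each in [-8, 7]) into one byte.
 * Assumed layout for this sketch: first value in the low nibble. */
uint8_t pack_int4_pair(int8_t lo, int8_t hi) {
    assert(lo >= -8 && lo <= 7 && hi >= -8 && hi <= 7);
    return (uint8_t)(((uint8_t)lo & 0x0F) | (((uint8_t)hi & 0x0F) << 4));
}

/* Sign-extend the 4-bit value held in the low nibble of v. */
int8_t unpack_int4(uint8_t v) {
    v &= 0x0F;
    return (int8_t)((v ^ 0x08) - 0x08);
}

/* One output channel: integer dot product of k int8 activations with
 * k packed int4 weights, followed by a single rescale to float.
 * w_scale is the per-channel weight scale; x_scale is the activation scale. */
float dot_int8_int4(const int8_t *x_q, float x_scale,
                    const uint8_t *w_packed, float w_scale, size_t k) {
    assert(k % 2 == 0);
    int32_t acc = 0;
    for (size_t i = 0; i < k; i += 2) {
        const uint8_t byte = w_packed[i / 2];
        acc += (int32_t)x_q[i] * unpack_int4(byte);
        acc += (int32_t)x_q[i + 1] * unpack_int4((uint8_t)(byte >> 4));
    }
    return (float)acc * x_scale * w_scale;
}
```

The inner loop is all integer multiply-accumulate work, which is the kind of computation that the dotprod and i8mm extensions are designed to speed up.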

Each .c and .h file pair in the folder represents a micro-kernel variant. These variants are distinguished by computational parameters (such as block size), the Arm® technology used (such as Arm® Neon), and the specific Arm® architecture feature exploited (such as FEAT_DotProd).
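One way to picture variants that differ only in such parameters is a small table of function pointers per variant, which lets a caller swap one variant for another without changing the surrounding code. The sketch below is a hypothetical illustration in plain C. The struct, its field names, and both variants are invented for this example and do not reproduce the KleidiAI headers, and both variants share one reference body, whereas real variants would differ in their optimized inner loops.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical interface for illustration; not the KleidiAI API. */
typedef struct {
    size_t (*get_m_step)(void);  /* rows of output handled per call    */
    size_t (*get_n_step)(void);  /* columns of output handled per call */
    void (*run)(size_t m, size_t n, size_t k,
                const float *lhs, const float *rhs_t,
                float *dst, size_t dst_stride);
} matmul_ukernel_t;

/* Reference body: dst (m x n) = lhs (m x k) * rhs_t^T, with rhs_t stored
 * as n rows of k values. */
static void run_ref(size_t m, size_t n, size_t k,
                    const float *lhs, const float *rhs_t,
                    float *dst, size_t dst_stride) {
    for (size_t i = 0; i < m; ++i) {
        for (size_t j = 0; j < n; ++j) {
            float acc = 0.0f;
            for (size_t p = 0; p < k; ++p) {
                acc += lhs[i * k + p] * rhs_t[j * k + p];
            }
            dst[i * dst_stride + j] = acc;
        }
    }
}

static size_t step_1(void) { return 1; }
static size_t step_4(void) { return 4; }

/* Two hypothetical variants that differ only in their output block size. */
const matmul_ukernel_t variant_1x4 = { step_1, step_4, run_ref };
const matmul_ukernel_t variant_4x4 = { step_4, step_4, run_ref };

/* Driver written once against the interface; works with any variant. */
void matmul_with(const matmul_ukernel_t *uk, size_t M, size_t N, size_t K,
                 const float *lhs, const float *rhs_t, float *dst) {
    const size_t m_step = uk->get_m_step();
    const size_t n_step = uk->get_n_step();
    assert(m_step > 0 && n_step > 0);
    for (size_t m0 = 0; m0 < M; m0 += m_step) {
        const size_t m_len = (M - m0 < m_step) ? (M - m0) : m_step;
        for (size_t n0 = 0; n0 < N; n0 += n_step) {
            const size_t n_len = (N - n0 < n_step) ? (N - n0) : n_step;
            uk->run(m_len, n_len, K, lhs + m0 * K, rhs_t + n0 * K,
                    dst + m0 * N + n0, N);
        }
    }
}
```

With this shape, a framework could pick a variant once at start-up, for instance based on the CPU features it detects, and leave the rest of its matrix multiplication path untouched.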

All micro-kernel variants share the same functions and interface, ensuring consistency. While consistency is a critical factor for ease of adoption, what other measures have we taken to help our framework developers integrate our micro-kernels effortlessly?

Ease of adoption

We understand the challenges of integrating a new library in AI frameworks, such as library size, external dependencies, and documentation. That is why we have gone the extra mile to gather feedback from our partners and incorporate it into our vision, ensuring the integration process is as seamless as possible.

Are you curious how we achieved this? The design principle of KleidiAI focuses on enabling framework developers to easily integrate the micro-kernels they need. Each micro-kernel can be integrated by simply pulling the corresponding .c and .h files, along with a common header file (kai_common.h) shared by all micro-kernels. We refer to this as the "three-file micro-kernel dependency." That’s all it takes.

The best way to see our micro-kernels in use is to follow the guide we have prepared for you, available here. The guide explores the usage of the integer 4-bit matrix multiplication micro-kernel, which contributed to enhancing the Gemma LLM's performance.

That’s all for this blog post, but you can also check out the details for Arm KleidiCV, a similar library of optimized routines for integrating into any computer vision framework, or stay tuned to our GitLab project for more tutorials and blogs to come.

A presto!

Gian Marco

Re-use is permitted for informational and non-commercial or personal use only.